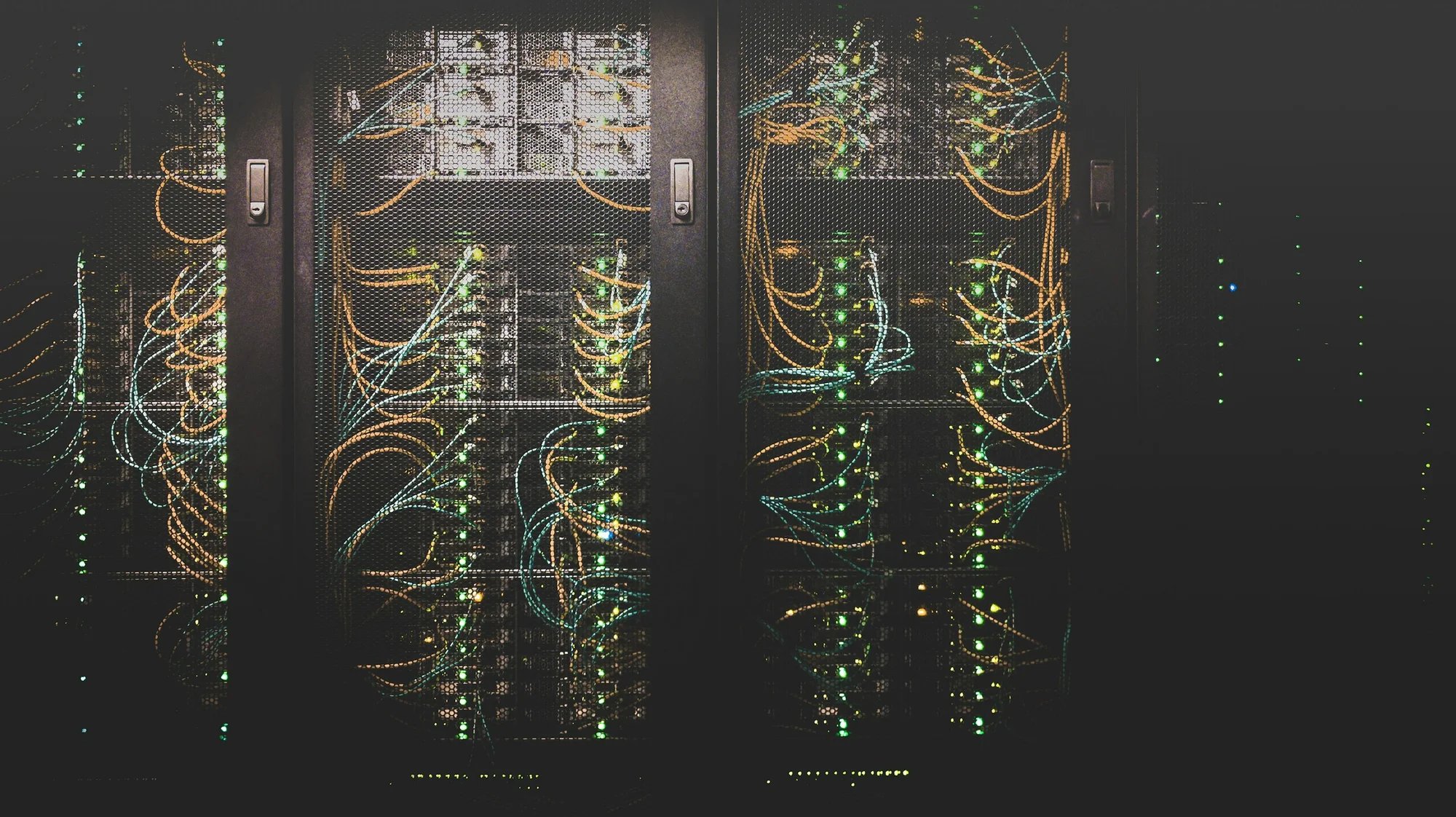

Managed AI Hosting

Production-grade infrastructure for AI models with GPU optimization, monitoring, and scaling.

Infrastructure Features

GPU-Optimized Hosting

NVIDIA A100, H100, and L4 GPUs optimized for inference and training workloads.

Auto-Scaling

Kubernetes-based auto-scaling handles traffic spikes and optimizes resource usage.
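As a rough illustration of how Kubernetes-style scaling responds to a spike, the sketch below applies the replica formula documented for the Horizontal Pod Autoscaler: desired = ceil(current replicas × current metric / target metric). The function name, the replica bounds, and the 10% tolerance are illustrative assumptions, not this platform's actual configuration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,     # assumed lower bound, for illustration only
    max_replicas: int = 10,    # assumed upper bound, for illustration only
    tolerance: float = 0.1,    # assumed dead band around the target
) -> int:
    """Return the replica count suggested by the HPA-style ratio formula."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% GPU utilization with a 60% target
    print(desired_replicas(4, 90.0, 60.0))
```

With these assumed numbers, a load 1.5 times the target grows the deployment from 4 to 6 replicas, and a drop in load shrinks it again down to the minimum.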

Model Versioning

Deploy multiple model versions with A/B testing, rollback, and canary releases.
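One common way to implement a canary release is to route a fixed percentage of requests to the new model version and keep the rest on the stable one. The sketch below shows that idea under stated assumptions: the function name, the version labels `v1` and `v2`, and the hash-based bucketing are hypothetical and do not describe this platform's routing layer.

```python
import hashlib


def pick_version(
    request_id: str,
    canary_percent: int,
    stable: str = "v1",   # hypothetical label for the current version
    canary: str = "v2",   # hypothetical label for the new version
) -> str:
    """Deterministically assign a request to the stable or canary version."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the request id to a bucket in the range 0..99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    return canary if bucket < canary_percent else stable


if __name__ == "__main__":
    ids = [f"req-{i}" for i in range(10_000)]
    share = sum(pick_version(r, 10) == "v2" for r in ids) / len(ids)
    print(f"canary share: {share:.1%}")
```

Because the assignment depends only on the request id, the same caller keeps hitting the same version during the rollout. In this sketch, rollback amounts to setting `canary_percent` to 0, and full promotion to setting it to 100.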

Security & Compliance

Encrypted data pipelines, SOC 2 compliance, and private cloud deployment options.

What We Support

Large Language Models (LLMs)

Computer Vision Models

Speech Recognition & TTS

Custom PyTorch/TensorFlow Models

RAG Pipelines & Vector DBs

Real-time Inference APIs

Simple, Transparent Pricing

All plans include SSL certificates, DDoS protection, automated backups, and a 99.9% uptime guarantee.
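To put the 99.9% figure in concrete terms, the short calculation below converts an uptime percentage into a downtime budget. The measurement window is an assumption here (a 30-day month and a 365-day year); the actual measurement period and any exclusions depend on the service agreement.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float) -> float:
    """Return the downtime budget, in minutes, for a given uptime percentage."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


if __name__ == "__main__":
    monthly = allowed_downtime_minutes(99.9, 30)    # assumed 30-day window
    yearly = allowed_downtime_minutes(99.9, 365)    # assumed 365-day window
    print(f"per 30 days: {monthly:.1f} minutes")
    print(f"per 365 days: {yearly / 60:.2f} hours")
```

Under these assumptions, 99.9% uptime allows about 43.2 minutes of downtime in a 30-day month, or about 8.76 hours over a year.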